Don't Let a Transition Year Slow You Down

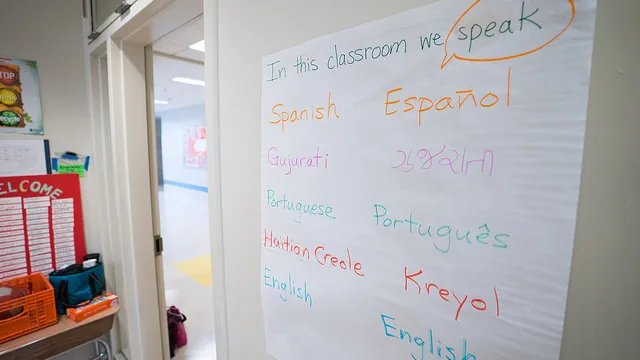

Driven by the adoption of more rigorous, language-rich content standards, states across the country are adopting new English Language Development (ELD) standards and assessments to support English Language Learners (ELLs). This focus on language development is mostly a good thing: ELLs are the fastest-growing population of K-12 students and the subgroup most likely to need more support as content standards evolve. But the transition to new English language proficiency (ELP) assessment models creates real practical challenges. For example, how do ELL educators measure language proficiency growth during the critical transition year? And are correlation studies helpful tools for educators?

To answer these questions, we decided to look in our own backyard. Here in Massachusetts, the Commonwealth decided to join the WIDA consortium for the 2012-13 school year. Previously, educators in the state used the MEPA to measure language proficiency; in the winter and spring of 2013, districts began administering the ACCESS assessment. The folks at the Department of Elementary and Secondary Education (DESE) conducted a correlation, or bridge, study to help educators understand how to link the results of the two assessments.

Here’s how they did it: for each grade level, DESE assigned each MEPA score a percentile rank. They did the same for ACCESS scores and then matched scores that shared the same percentile rank. For example, a MEPA score of 497 and an ACCESS score of 317 were both mapped to the 65th percentile. This method, equipercentile linking, sounds sensible, right? But there were a few important assumptions underlying the analysis:

- The student population who took the MEPA in 2012 had demographic characteristics similar to those of the students who took the ACCESS in 2013.

- The students would perform similarly on the two tests.

- The tests were similar in content and level of rigor.
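The percentile-matching step can be sketched in a few lines of Python. This is a minimal, simplified illustration, not DESE's actual procedure: the function names `percentile_rank` and `equipercentile_crosswalk` are hypothetical, the scores are invented toy numbers for a single grade level (not real MEPA or ACCESS data), and the sketch skips the smoothing and interpolation that operational linking studies typically add. It uses mid-percentile ranks (percent of scores below, plus half the percent tied) and maps each old-test score to the lowest new-test score whose rank reaches the same level.

```python
from bisect import bisect_left, bisect_right


def percentile_rank(sorted_scores, x):
    """Mid-percentile rank of x: percent of scores below x plus half the percent equal to x.

    `sorted_scores` must already be sorted in ascending order.
    """
    below = bisect_left(sorted_scores, x)
    equal = bisect_right(sorted_scores, x) - below
    return 100.0 * (below + 0.5 * equal) / len(sorted_scores)


def equipercentile_crosswalk(old_scores, new_scores):
    """Hypothetical helper: map each distinct old-test score to the new-test
    score with the same (or next-higher) percentile rank.

    Simplified on purpose: no smoothing and no interpolation between score points.
    """
    old_sorted = sorted(old_scores)
    new_sorted = sorted(new_scores)
    new_points = sorted(set(new_sorted))
    # Mid-percentile ranks rise strictly across distinct scores, so this list is sorted.
    new_ranks = [percentile_rank(new_sorted, y) for y in new_points]

    crosswalk = {}
    for x in sorted(set(old_sorted)):
        target = percentile_rank(old_sorted, x)
        # Index of the first new-test score whose rank reaches the target rank.
        i = min(bisect_left(new_ranks, target), len(new_points) - 1)
        crosswalk[x] = new_points[i]
    return crosswalk


# Invented toy scores for ONE grade level; these are NOT real MEPA or ACCESS results.
old_test = [470, 485, 500, 515, 530]
new_test = [290, 300, 305, 320, 330, 335, 340, 350, 360, 370]

crosswalk = equipercentile_crosswalk(old_test, new_test)
print(crosswalk)  # {470: 300, 485: 320, 500: 335, 515: 350, 530: 370}
```

Notice that the sketch simply lines up two separate score distributions and never checks whether the two groups of students, or the two tests, are actually comparable. That is why the assumptions in the list carry so much weight: if they fail, the crosswalk still produces tidy-looking numbers, but they no longer mean what they appear to mean.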

I don’t know about you, but as a former teacher I know how difficult it is to create two valid tests with similar content and rigor. While the linking model seemed grounded in sound logic, I was inclined to question the study’s accuracy.

As it turns out, we weren’t the only ones who felt this way. DESE itself cautioned educators to treat the bridge study’s results as well-informed estimates, not precise equivalences between the two tests. The department also stated that these scores should not be used on their own to make progress determinations for students.

To learn more, we spoke with some of our local partners to get an on-the-ground perspective. What did ELL educators think of this study? Were they as skeptical as I was? It turns out they were. Many did not use the correlated scores at all, and others knew little about the study in the first place. Those who did use the correlation crosswalk saw major gaps that reinforced doubts about the study’s validity. For example, cohorts in which 50% of students had previously shown progress now had only 7% of students showing progress, an unlikely drop-off in performance. Among the educators we’ve spoken with, there seemed to be two schools of thought:

- This study may hold some validity, but we won’t use the correlated scores alone to inform our instruction or reclassification decisions, or

- This data does not seem to give us a valid comparison so we’ll use other criteria in our decision making this year.

Both responses show that Massachusetts educators did not let this study hinder their service to ELs. Instead, it pushed them to draw on more, and more varied, data points.

Was Massachusetts wasting time and resources by conducting this study? I’m not sure. Although the results were imperfect, they gave educators a starting point for thinking about the new ACCESS scores and what those scores meant for their students. Truthfully, it may have been the best study possible under the circumstances, and it has had the effect (intended or not) of refocusing ELL professionals on other kinds of data to support decision making. That legacy will be worth continuing even once multiple years of ACCESS scores allow for more reliable growth measures.

As your state ends a transition year or looks ahead to one, I urge you to keep giving your ELs what they deserve. Waiting for a perfectly sound correlation study, or hesitating until you fully understand the revised standards, is no reason to stop pushing forward. Your work makes a difference in each of your students’ lives. Don’t let a transition year slow you down.